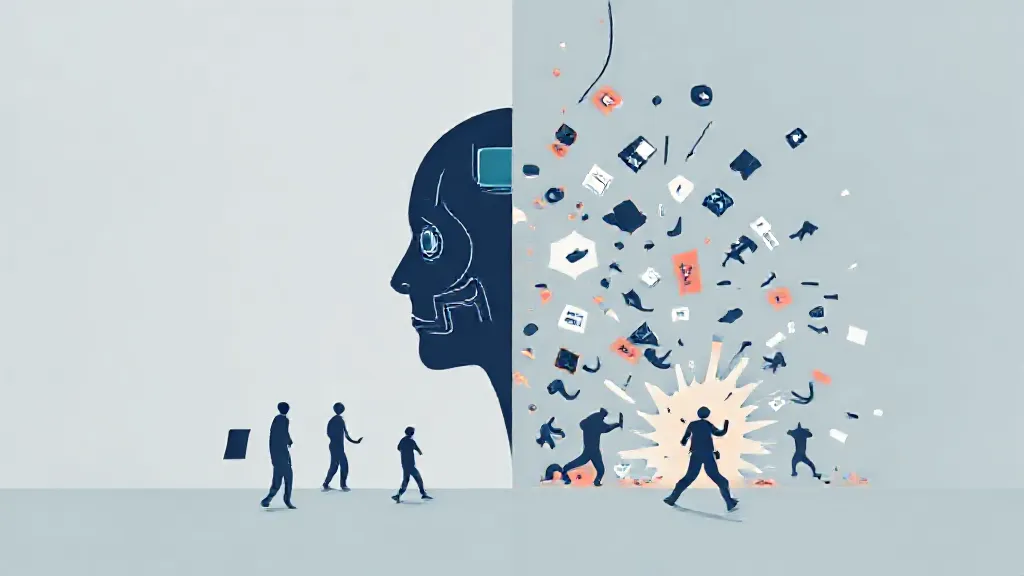

As artificial intelligence (AI) continues to permeate various sectors, the implications of its decision-making processes have come under scrutiny. AI systems are designed to analyze vast amounts of data and make decisions more quickly and efficiently than humans. However, when these systems make mistakes, the consequences can be significant, leading to questions about accountability, ethics, and the reliability of AI technologies.

Understanding AI Decision-Making Errors

AI decision-making errors can occur for numerous reasons, including biased training data, flawed algorithms, and unforeseen scenarios. For instance, if an AI system is trained on historical data that reflects societal biases, it may perpetuate those biases in its decision-making. A notable example is the use of AI in hiring processes, where algorithms may favor candidates of a certain demographic based on biased data, leading to discrimination.
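One way to make this kind of bias visible is to compare how often each group receives a favorable outcome in the historical data. The sketch below is illustrative only: the group labels and hiring records are invented, and the function names `selection_rates` and `disparate_impact_ratio` are hypothetical rather than taken from any particular library. A ratio well below 1.0 suggests that a model trained on this data could learn the same skew; in US employment guidance, a ratio under 0.8 (the "four-fifths rule") is a common rule-of-thumb flag, not a legal verdict.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favorable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the candidate was hired.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


# Hypothetical historical hiring outcomes: 10 candidates per group.
history = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(history)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

In this invented data, group A is hired at twice the rate of group B, so the ratio comes out at one half.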

Real-World Examples of AI Failures

Several high-profile cases illustrate the potential fallout from erroneous AI decisions. In March 2018, a self-driving Uber test vehicle struck and killed a pedestrian in Tempe, Arizona. Investigators found that the vehicle's automated driving system did not correctly classify the pedestrian or predict her path in time to avoid the collision.

Such incidents raise concerns about the safety of autonomous systems and the responsibility of developers and companies in ensuring their reliability.

The Ethical Dilemma of AI Mistakes

The ethical implications of AI errors are profound. When an AI system fails, it raises the question of who is accountable: the developers, the users, or the AI itself? This dilemma is particularly pressing in sectors like healthcare, where AI is increasingly used for diagnostics and treatment recommendations.

A wrong diagnosis made by an AI could lead to severe health consequences, prompting discussions about the ethical responsibilities of AI creators.

Legal Ramifications of AI Errors

As AI technologies evolve, so too does the legal landscape surrounding them. Current laws often struggle to keep pace with the rapid advancements in AI.

In cases where AI makes a wrong decision, such as in autonomous vehicles or financial trading systems, determining liability can be complex. Legal frameworks may need to adapt to address these challenges, potentially leading to new regulations and standards for AI development and deployment.

Mitigating Risks Associated with AI

To address the risks of AI decision-making errors, organizations can implement several strategies.

Regular audits of AI systems can help identify and rectify biases or flaws in algorithms. Additionally, incorporating human oversight into AI decision-making processes can serve as a safeguard against potential errors. By ensuring that humans remain in the loop, organizations can better manage the risks associated with AI technologies.
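Human oversight is often implemented as a simple routing rule: decisions the model is confident about proceed automatically, and everything else goes to a person. The following is a minimal sketch under stated assumptions. The `Prediction` type, the `route` function, and the 0.9 threshold are all hypothetical, and a real deployment would choose the threshold from measured error rates rather than a fixed guess.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model's estimated probability for `label`, in [0, 1]


def route(prediction, threshold=0.9):
    """Accept confident predictions automatically; send the rest to a person."""
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if prediction.confidence >= threshold:
        return "auto"
    return "human_review"


# Hypothetical predictions from some upstream model.
for p in [Prediction("approve", 0.97), Prediction("deny", 0.62)]:
    print(p.label, p.confidence, route(p))
```

Raising the threshold sends more cases to reviewers and lowers the share of unchecked automated decisions. The trade-off is reviewer workload, so the threshold is a policy choice as much as a technical one.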

The Role of Transparency in AI

Transparency is crucial in building trust in AI systems. Users and stakeholders must understand how AI systems make decisions to assess their reliability. Companies can enhance transparency by providing clear explanations of the algorithms used and the data on which they are trained.

This openness can help mitigate fears surrounding AI errors and foster a more informed public discourse about the technology.
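For simple models, an explanation can be as direct as showing how much each input contributed to the result. The sketch below assumes a hypothetical linear scoring model, where the score is a weighted sum of the inputs. The feature names, weights, and the `explain_linear_score` function are invented for illustration. More complex models generally need dedicated explanation techniques that this example does not cover.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and each feature's contribution, largest effect first.

    For a linear model, a feature's contribution is its weight times its value.
    """
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical weights and one candidate's feature values.
weights = {"years_experience": 0.5, "skills_match": 2.0, "gap_in_resume": -1.0}
candidate = {"years_experience": 4, "skills_match": 0.5, "gap_in_resume": 1}

score, ranked = explain_linear_score(weights, candidate)
print("score:", score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

A breakdown like this lets a reviewer see which inputs drove a decision and question any that should not have mattered.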

Future Directions for AI Decision-Making

Looking ahead, the future of AI decision-making hinges on both continued technical advances and sustained attention to ethics. Researchers are exploring ways to create more robust AI systems that can better handle ambiguity and adapt to new situations.

Additionally, interdisciplinary collaboration among technologists, ethicists, and legal experts will be essential in shaping the development of AI technologies that prioritize safety and accountability.

Conclusion: Navigating the AI Landscape

As AI becomes increasingly integral to various aspects of life, understanding the ramifications of its potential errors is vital. Stakeholders must work together to navigate the complexities of AI decision-making, ensuring that the benefits of technology are harnessed while minimizing risks.

By fostering a culture of responsibility and transparency, society can better prepare for the challenges posed by AI, ultimately leading to safer and more effective applications of this transformative technology.